In the previous post, we developed a basic real-time collaborative application.

In this post, we are going to scale the system.

Our previous application is perfectly fine for a small project. Let’s say we have added more features to it and now want to deliver it to end users.

To make the application production-ready, we need to make sure it is scalable and always available to our customers.

Vertical scale

It involves upgrading our instance or adding extra resources (such as CPU or memory) to support an increasing workload.

Horizontal scale

It is a distributed strategy of adding more copies of the instance, all running the same task in parallel.

Each approach has its own pros and cons and suits different scenarios. In enterprise applications, however, horizontal scaling is the more popular choice.

Socket scale

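In our application, we use sockets to handle real-time operations, and each socket runs over a TCP connection. A TCP connection is identified by its source IP, source port, destination IP and destination port, so a single source IP can open at most 65,535 sessions to a single destination IP and port. This matters once a proxy or load balancer sits in front of the application: all of its connections to an instance share one source IP, so we can only have about 65K connections at a time with a single instance.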

There are two ways to solve this issue:

- Assign multiple IPs to an instance, each with its own range of ports. This way, maximum connections = number of IPs × 65K.

- Run multiple instances.

The problem with assigning IPs to an instance is that it is manual work with limited capacity. For that reason, we’ll move forward with multiple instances. This also gives us the benefit of scaling out further as needed.
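The arithmetic behind the two options can be sketched roughly as follows. This is only an illustration: the function names are hypothetical, and the 65,535 figure is the per-IP ceiling quoted above, not a measured capacity. Real limits are usually lower because of memory and file descriptor constraints.

```typescript
// Upper bound on TCP sessions from one source IP to one destination IP and port.
const PORTS_PER_IP = 65535;

// Option 1: attach more IPs to a single instance.
function maxConnections(ipCount: number): number {
  return ipCount * PORTS_PER_IP;
}

// Option 2: run more instances. How many do we need for a target load?
function instancesNeeded(
  targetConnections: number,
  perInstance: number = PORTS_PER_IP
): number {
  return Math.ceil(targetConnections / perInstance);
}
```

For example, `instancesNeeded(200000)` asks how many instances are required for 200K concurrent connections under this rough bound.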

With multiple instances, another issue appears: socket synchronization. For example, suppose two users are connected to two different instances. If one user posts an action, the other user will not receive it, because the instance that handled the action only knows about its own sockets.

To let these sockets communicate, we need a central place to handle synchronization. Any message broker with a pub-sub mechanism will do. For our use case, we’ll use Redis.

1 | ... |
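To illustrate the idea, here is a minimal sketch in TypeScript. It uses an in-memory `Broker` class as a stand-in for Redis, so the class names, the channel name `document:42` and the user names are all hypothetical. In a real deployment, `publish` and `subscribe` would go through a Redis client instead.

```typescript
type Handler = (message: string) => void;

// In-memory stand-in for a pub-sub broker such as Redis (illustration only).
class Broker {
  private channels = new Map<string, Set<Handler>>();

  subscribe(channel: string, handler: Handler): void {
    let handlers = this.channels.get(channel);
    if (!handlers) {
      handlers = new Set<Handler>();
      this.channels.set(channel, handlers);
    }
    handlers.add(handler);
  }

  publish(channel: string, message: string): void {
    const handlers = this.channels.get(channel);
    if (!handlers) return;
    handlers.forEach((handler) => handler(message));
  }
}

// One application instance with its own locally connected users.
class Instance {
  private readonly broker: Broker;
  private readonly channel: string;
  // userId -> messages delivered to that user's socket
  readonly inbox = new Map<string, string[]>();

  constructor(broker: Broker, channel: string) {
    this.broker = broker;
    this.channel = channel;
    // Every instance listens on the shared channel and fans out locally.
    broker.subscribe(channel, (message) => this.deliverLocally(message));
  }

  connect(userId: string): void {
    this.inbox.set(userId, []);
  }

  // Called when a locally connected user posts an action.
  post(message: string): void {
    this.broker.publish(this.channel, message);
  }

  private deliverLocally(message: string): void {
    this.inbox.forEach((messages) => messages.push(message));
  }
}

const broker = new Broker();
const instanceA = new Instance(broker, "document:42");
const instanceB = new Instance(broker, "document:42");
instanceA.connect("alice");
instanceB.connect("bob");
instanceA.post("alice inserted 'x' at 0");
```

After the last line, the message reaches bob on `instanceB` even though alice posted it through `instanceA`. In this sketch the sender’s own instance receives the message as well; whether to echo an action back to its author is a design choice.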

Load balance

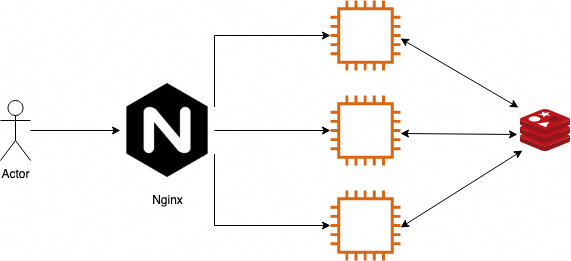

For load balancing, we’ll use Nginx, as it is one of the most popular tools and has plenty of resources available. We could also choose another tool such as HAProxy.

When we run multiple instances, we need to make sure a client’s socket sticks to the instance it is already connected to. If a client’s requests land on a different server each time and a new socket has to be created there, performance will take a hit.

1 | events { |
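The idea behind sticky routing can be sketched in a few lines of TypeScript. Nginx offers IP-based stickiness through its `ip_hash` directive; the code below only illustrates the general principle and is not Nginx’s actual algorithm. The upstream addresses and function names are hypothetical.

```typescript
// Hypothetical upstream list; the addresses are placeholders.
const upstreams: string[] = ["app1:3000", "app2:3000", "app3:3000"];

// Simple deterministic string hash (not cryptographic).
function hash(value: string): number {
  let h = 0;
  for (let i = 0; i < value.length; i++) {
    h = (h * 31 + value.charCodeAt(i)) >>> 0; // keep as unsigned 32-bit
  }
  return h;
}

// The same client IP always maps to the same upstream server,
// as long as the server list does not change.
function pickUpstream(clientIp: string, servers: string[]): string {
  if (servers.length === 0) {
    throw new Error("no upstream servers configured");
  }
  const server = servers[hash(clientIp) % servers.length];
  if (server === undefined) {
    throw new Error("upstream index out of range");
  }
  return server;
}
```

Calling `pickUpstream("203.0.113.7", upstreams)` repeatedly returns the same server each time. One trade-off of this simple modulo scheme is that adding or removing a server remaps many clients.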

To test our load balancing strategy, we are going to run the application using Docker Compose.

1 | version: '3' |

Deployment strategy

After working out all the tweaks and improvements, we can deploy our service to cloud infrastructure. The application can be split into separate front end and backend applications.

For a production-ready application, there is a checklist of work to be done before going live.

- Deploy our front end application behind a Content Delivery Network (CDN), which supports scaling across global regions and caches content for faster responses

- Deploy our services inside a cluster that handles the load

- Autoscale service containers based on load parameters

- Autoscale server instances based on parameters like CPU and memory usage

- Log and monitor requests, and act on regular feedback
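As a rough illustration of autoscaling on CPU and memory, the sketch below uses a proportional rule: scale the replica count by the ratio of the observed metric to its target, then clamp it to a configured range. This is similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler, but the types, names and thresholds here are assumptions for illustration, not part of the original setup.

```typescript
interface Metrics {
  cpuPercent: number;
  memoryPercent: number;
}

interface Policy {
  targetCpuPercent: number;
  targetMemoryPercent: number;
  minReplicas: number;
  maxReplicas: number;
}

// Proportional scaling: replicas grow with the ratio of observed to target usage.
function desiredReplicas(current: number, metrics: Metrics, policy: Policy): number {
  const byCpu = Math.ceil(current * (metrics.cpuPercent / policy.targetCpuPercent));
  const byMemory = Math.ceil(current * (metrics.memoryPercent / policy.targetMemoryPercent));
  // Take the more demanding of the two signals, then clamp to the allowed range.
  const wanted = Math.max(byCpu, byMemory);
  return Math.min(policy.maxReplicas, Math.max(policy.minReplicas, wanted));
}

// Hypothetical policy values.
const policy: Policy = {
  targetCpuPercent: 60,
  targetMemoryPercent: 70,
  minReplicas: 2,
  maxReplicas: 10,
};
```

With this policy, four replicas running at 90% CPU and 50% memory would be scaled up, since CPU is well above its 60% target.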

Final product

Let’s define some requirements for rolling out our application to end users. These are some of the basic features we can add, and we’ll do a system design for them.

- User authentication and authorization

- Real-time collaborative editing

- Document save and retrieval

- Document history

- Comments / feedback

- Notification

Front end

The front end can be developed in any framework and deployed behind a CDN. To have better control over the editor, there are many things to consider, such as:

- Multiple cursors

- Applying style changes

- Import / export support for different formats
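To give a feel for what multiple cursor support involves, here is a minimal sketch of keeping remote cursors in the right place when text is inserted or deleted. It assumes cursors are plain character offsets in a flat string; the `Cursor` type and function names are hypothetical, and a real editor would tie this to its own document model.

```typescript
interface Cursor {
  userId: string;
  position: number; // character offset in the document
}

// Shift cursors after `length` characters are inserted at `index`.
// Design choice: a cursor sitting exactly at `index` moves after the new text.
function shiftCursorsOnInsert(cursors: Cursor[], index: number, length: number): Cursor[] {
  return cursors.map((c) =>
    c.position >= index ? { ...c, position: c.position + length } : c
  );
}

// Shift cursors after `length` characters are deleted starting at `index`.
function shiftCursorsOnDelete(cursors: Cursor[], index: number, length: number): Cursor[] {
  return cursors.map((c) => {
    if (c.position <= index) return c;
    // Cursors inside the deleted range collapse to its start.
    return { ...c, position: Math.max(index, c.position - length) };
  });
}
```

Both functions return new arrays rather than mutating their input, which keeps them easy to use with state-driven UI frameworks.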

Backend service

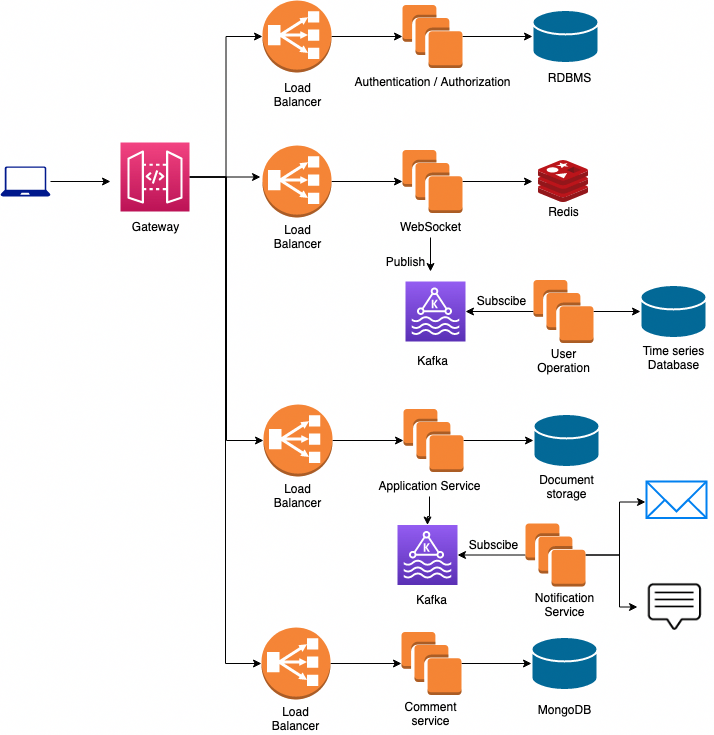

We can think of separate services to support our application.

- Authentication / Authorization service

- Application service

- Websocket service (Lightweight and Fast)

- Notification service

- Comment service

All of these services can be developed and deployed independently behind a load balancer. Autoscaling can be configured per service based on different attributes.

Storage decisions

We have a plethora of database solutions to choose from. For our implementation, we can combine several of them.

- RDBMS (PostgreSQL, MySQL, etc.) for the user management service

- Document DB (MongoDB, CouchDB, or PostgreSQL with JSONB columns) for document storage

- Redis as the publish-subscribe message broker

- Time series database (InfluxDB) for storing user operations

- MongoDB for storing comments
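Storing user operations with timestamps is what makes document history possible: the content at any point in time can be rebuilt by replaying the operations up to that moment. The sketch below shows the idea with an in-memory array. The `Operation` shape and function names are assumptions for illustration; in the design above, the log would be read from the time series database.

```typescript
type Operation =
  | { kind: "insert"; at: number; text: string; userId: string; timestamp: number }
  | { kind: "delete"; at: number; length: number; userId: string; timestamp: number };

// Apply a single operation to the document content.
function applyOperation(content: string, op: Operation): string {
  if (op.kind === "insert") {
    return content.slice(0, op.at) + op.text + content.slice(op.at);
  }
  return content.slice(0, op.at) + content.slice(op.at + op.length);
}

// Rebuild the document as it looked at `timestamp` by replaying the log in order.
function contentAt(log: Operation[], timestamp: number): string {
  return log
    .filter((op) => op.timestamp <= timestamp) // filter returns a copy, so sort is safe
    .sort((a, b) => a.timestamp - b.timestamp)
    .reduce(applyOperation, "");
}

// Hypothetical operation log.
const log: Operation[] = [
  { kind: "insert", at: 0, text: "Hello", userId: "alice", timestamp: 1 },
  { kind: "insert", at: 5, text: " world", userId: "bob", timestamp: 2 },
  { kind: "delete", at: 0, length: 6, userId: "alice", timestamp: 3 },
];
```

Replaying a long log from the beginning gets slow, so a real system would likely store periodic snapshots and replay only the operations after the nearest one.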

Data stream

For our data stream, we can use Apache Kafka, an open-source distributed event streaming platform.

Design

Resource

We tried to design a system based on some requirements. In a real project, this can change as needed. There are a lot of resources on the internet about scaling socket implementations.

Socket.io Using multiple nodes

Scaling a realtime chat app on AWS using Socket.io, Redis, and AWS Fargate

The Road to 2 Million Websocket Connections in Phoenix — Phoenix Blog

600k concurrent websocket connections on AWS using Node.js

10M — goroutines

About this Post

This post is written by Mahfuzur Rahman, licensed under CC BY-NC 4.0.